code release and redundancy

Message boards : Number crunching : code release and redundancy


ecafkid (Joined: 5 Oct 05, Posts: 40, Credit: 15,177,319, RAC: 0)

Credits seem to be awfully important to some people. I thought this was volunteer work and we were doing this because we believed in what the project was doing. I didn't know this was a competition. Wow, what a competitive group. Must be true. You said so. LOL

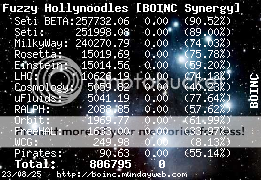

Fuzzy Hollynoodles (Joined: 7 Oct 05, Posts: 234, Credit: 15,020, RAC: 0)

It is true! Yes, people can be in these projects "for the science" and for other reasons, but getting nothing of any kind in return is IMHO too cheap, and it's illusory to think that people will donate time and money without getting something back. Just look at the discussions over at Seti about money donations and the green star, and how they evolved into suggestions for stars of different colors for donations other than money! That's a good example of how a symbol of a donation can be the subject of endless discussion. However people look at donations of any kind, most people want some kind of acknowledgement, no matter how good their intentions are.

Here, where I live, blood donations are not paid, but blood donors get a pin and other symbolic gifts that signal to other people that they donate blood! It's the same in the BOINC projects: we get credit for work done. You can also see the User of the Day feature as a kind of acknowledgement; you don't need any credit to become UOTD, all you need is an account and a profile! Nonetheless, people feel it as an honour when they become UOTD!

Myself, I don't crunch "for the science"; I crunch because I'm interested in the development of DC technology, and BOINC is an eminent example of that. I don't overclock my computer, I don't compile my own client, and I don't fiddle about with other things to get higher credit. Credit is for me an added bonus for participating in these projects, but I will certainly have the credit my computer has deserved for the cycles it has produced! So when I find out that other people fiddle about with things to claim higher credit than they really deserve, I'm very upset about that! And if the people behind the projects won't do anything to prevent this from happening, I'll donate my cycles to projects where they do care to do something to prevent this!

"I'm trying to maintain a shred of dignity in this world." - Me


AnRM (Joined: 18 Sep 05, Posts: 123, Credit: 1,355,486, RAC: 0)

> ... but I will certainly have the credit my computer has deserved for the cycles it has produced! So when I find out that other people fiddle about with things to claim higher credit than they really deserve, I'm very upset about that! And if the people behind the projects won't do anything to prevent this from happening, I'll donate my cycles to projects where they do care to do something to prevent this!

Right on, Fuzzy...... Cheers, Rog.

Biggles (Joined: 22 Sep 05, Posts: 49, Credit: 102,114, RAC: 0)

I'm here for both the science AND the stats.

With regards to redundancy, I find it difficult to have much faith in unverified results. How much important data do you write off due to unstable machines before you consider redundancy worth having? How do we know we haven't already missed the most important result because it got corrupted? The other boon of redundancy is that it partially minimises the effects of credit cheats or "tweakers".

As for releasing the source code, I think you should, in a limited way. By that I mean you should release the actual scientific processing code, so that programmers out there can optimise it if possible. Let them compile it and test it, and if it turns out to be better, have them submit it to you. But don't give credit for work done with those self-compiled builds. When you are satisfied that it is a valid improvement, add some security/verification code that isn't released to the public to the improved code, and release that as the standard client. This way people can contribute, but if they use the source to manipulate and fake results, they don't get credit for it. And if they come up with valid improvements, everybody benefits. Hope that was clear enough.


Aegion (Joined: 14 Oct 05, Posts: 12, Credit: 3,374,900, RAC: 0)

> I'm here for both the science AND the stats.

I suspect you, like a few other individuals in this thread, haven't thought through the full ramifications of how the science of the project relates to these threats. This project is not like an encryption-breaking effort, where every computer gets assigned a different key, or like Seti, where, unless redundant units are issued, everyone ends up with a different data point and a corrupted result could mean missing a sign of intelligent life out there.

The goal of the project is to predict the outcome of protein folding, that is, the shape an amino acid chain folds into. Ultimately it's only the most accurate result, as long as it can be accurately detected and recognized, that really matters for this project. Theoretically you could have thousands of incredibly inaccurate results and only one that happens to predict the folded shape quite accurately, and the project could be considered spectacularly successful. Now, in practice, if everyone but one person is getting horribly inaccurate predictions, that suggests something should be done so that more people come closer, increasing the odds that one will get lucky and be extremely accurate. Having said that, it's ultimately how accurate the most accurate result is that determines whether this distributed folding project can be considered successful or not.

What this means is that, from a scientific standpoint, if 5% of the participants in such a project are cheating and producing entirely bogus results, the project only loses the 5% of participants who could otherwise have chosen to crunch productively for it. By contrast, two-fold redundancy (every unit crunched by two people) means a loss of 50% of effective computing power! The only concern from a scientific standpoint is that a participant's cheating might somehow trick the server-side software into thinking that a badly-off result is actually extremely accurate.
However, since the top predicted results for a particular protein should get manually examined by a scientist involved with the project, it is VERY unlikely that a cheater could trick a trained scientist, so no actual damage to the science of the project would be done. The only risk to the science would be if such a large portion of the participants started cheating that it became hard to determine whether a tweak to the code brings the results, in general, closer to being accurate. However, that level of cheating is unlikely to occur. While I'm not at all convinced that cheating is such a problem in the first place, you should recognize that it's not a threat to the actual science of the project.

Edit: Biggles, obviously feel free to quiz me more in this thread or in TVR's thread on the Ars Technica forum if you want more info relating to the science of the project itself.
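Aegion's throughput comparison can be put in numbers. This is a simplified model under stated assumptions: cheaters' results are simply worthless (never mistaken for good ones), every honest result is useful, and redundancy costs exactly a factor of `quorum` in distinct results. The function names are hypothetical, chosen only for illustration.

```python
def useful_share_with_cheaters(cheat_fraction: float) -> float:
    """Share of all crunching that yields usable results when each work unit
    runs once and cheaters' results are simply discarded (assumption)."""
    if not 0.0 <= cheat_fraction <= 1.0:
        raise ValueError("cheat_fraction must be between 0 and 1")
    return 1.0 - cheat_fraction


def useful_share_with_redundancy(quorum: int) -> float:
    """Share of all crunching that yields distinct results when every work
    unit is crunched `quorum` times (assumes no cheaters and no errors)."""
    if quorum < 1:
        raise ValueError("quorum must be at least 1")
    return 1.0 / quorum


if __name__ == "__main__":
    print(f"5% cheaters, quorum 1: {useful_share_with_cheaters(0.05):.0%} useful")
    print(f"no cheaters, quorum 2: {useful_share_with_redundancy(2):.0%} useful")
```

Under these assumptions it prints 95% for the cheating case and 50% for two-fold redundancy, which is the comparison Aegion draws; the model deliberately ignores the hardware errors and credit fairness that redundancy also addresses.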

Webmaster Yoda (Joined: 17 Sep 05, Posts: 161, Credit: 162,253, RAC: 0)

I may be wrong, but my understanding is that all Rosetta work units use a random seed to begin with, at the client's end. So if the same work unit is sent to two participants, they would not return the same result anyway. They might need to send it to millions of participants to get two matching results.

*** Join BOINC@Australia today ***


Aegion (Joined: 14 Oct 05, Posts: 12, Credit: 3,374,900, RAC: 0)

> I may be wrong, but my understanding is that all Rosetta work units use a random seed to begin with, at the client's end.

Based on what I learned participating in a distributed computing project with virtually identical goals, I'm pretty certain that what you're saying is the case, since that is certainly how it worked in the other project.

David E K (Volunteer moderator, Project administrator, Project developer, Project scientist; Joined: 1 Jul 05, Posts: 1480, Credit: 4,334,829, RAC: 0)

We would give a specific random seed for each work unit and use homogeneous redundancy. |
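To see why a server-assigned seed changes the picture Webmaster Yoda describes, here is a toy model. `toy_folding_run` is a hypothetical stand-in for one folding trajectory (a seeded random search on a made-up energy function, not Rosetta's actual method). With client-chosen seeds, two replicas of a work unit diverge; with the same seed, the same code retraces the same trajectory, so a validator can compare replicas directly.

```python
import random


def toy_folding_run(seed: int, steps: int = 1000) -> float:
    """Hypothetical stand-in for one folding trajectory: a seeded random walk
    that keeps downhill moves and returns the lowest toy 'energy' it saw."""
    rng = random.Random(seed)  # private generator: the run depends only on `seed`
    position = 0.0
    best = (position - 3.0) ** 2  # toy energy landscape with its minimum at 3.0
    for _ in range(steps):
        candidate = position + rng.gauss(0.0, 1.0)
        energy = (candidate - 3.0) ** 2
        if energy < (position - 3.0) ** 2:
            position = candidate  # accept downhill moves only
        best = min(best, energy)
    return best


def replicas_agree(seed_a: int, seed_b: int) -> bool:
    """Validator-style check: do two replicas report bit-identical results?"""
    return toy_folding_run(seed_a) == toy_folding_run(seed_b)


if __name__ == "__main__":
    print(replicas_agree(12345, 12345))  # True: same seed, same trajectory
    print(replicas_agree(12345, 67890))  # different seeds: almost surely False
```

Bit-identical agreement is only a realistic expectation when both hosts run the same code on similar platforms, which is the part homogeneous redundancy is meant to cover.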


Ingleside (Joined: 25 Sep 05, Posts: 107, Credit: 1,522,678, RAC: 0)

> what do people think?

Well, the redundancy needed in a BOINC project depends on two things: science and crediting.

For the science: will every cheating attempt or hardware error always show up in the end result as an "impossible" result? If not, min_quorum = 2 or higher.

For crediting it's more difficult:

1. Do all WUs running on the same computer give little variation in crunch time, as in CPDN? If yes, min_quorum = 1 and credit based on internal benchmarking.
2. Is there a limited number of WU types, each giving little variation in crunch time, as in Folding@home? If yes, min_quorum = 1 and credit based on internal benchmarking.
3. Crunch times vary a lot between WUs, and the occasional WU can finish after 1% or so. Most projects fall into this category, and there are sub-groups:
   - a) Credit is based on the BOINC benchmark. Because of the huge variation, this needs min_quorum = 3.
   - b) The application instead "counts flops"; if it manages this within 1% error, it only needs min_quorum = 2, provided work distribution is limited to v5.2.6 or later clients.

For someone trying to cheat, min_quorum >= 2 means the cheat only really succeeds if at least two of the users crunching the same WU cheated. So, until a majority of users are cheating, they're being stopped by min_quorum >= 2.

Now, someone may ask why "counting flops" can work with a lower quorum than the BOINC benchmark. The problem with the BOINC benchmark is that it can vary a lot, and some computers always claim very low credit. This means that if the lowest claim is used, many users will complain about getting only 0.1 CS (cobblestones) or so when they themselves claimed 10+. With min_quorum = 3 the lowest claim is not used to decide credit, so this problem mostly goes away. With "counting flops", if the error is within 1%, the "low" claimer claims 9.9 while the other claims 10. That difference is so small that very few users will complain, so min_quorum = 2 is good enough.
Anyway, it's possible Rosetta@home can keep using min_quorum = 1, but I would not recommend it while relying on the BOINC benchmark for crediting. Using boinc_ops_per_cpu_sec should be an improvement, and if a group of WUs mostly has the same crunch time except for the occasional 1% result, it may also be possible to check for cheaters and so keep using min_quorum = 1... Using min_quorum = 2, on the other hand, will validate the science, and if combined with boinc_ops_per_cpu_sec it also guards against cheaters and minimizes credit variation.
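Ingleside's crediting argument can be checked with a small sketch. It assumes the granting rule the post implies, which as far as I know matches the stock BOINC validator of that era: one valid result gets its own claim, two get the lower claim, and three or more drop the highest and lowest claims and average the rest. `granted_credit` and `cheat_gets_through` are hypothetical names, and the claim values are the illustrative numbers from the post, not measured data.

```python
from statistics import mean


def granted_credit(claims: list[float]) -> float:
    """Credit granted to every valid result in a quorum, under the assumed rule:
    1 claim -> that claim; 2 claims -> the lower one;
    3 or more -> drop the highest and lowest claims and average the rest."""
    if not claims:
        raise ValueError("need at least one valid result")
    ordered = sorted(claims)
    if len(ordered) <= 2:
        return ordered[0]
    return mean(ordered[1:-1])


def cheat_gets_through(cheat_fraction: float, quorum: int = 2) -> float:
    """Chance that all `quorum` replicas of a work unit land on cheaters (the
    condition the post gives for min_quorum = 2), assuming cheaters are spread
    independently and at random over the hosts."""
    return cheat_fraction ** quorum


if __name__ == "__main__":
    # Benchmark-based claims: one host claims far too little.
    print(granted_credit([0.1, 10.0]))        # quorum 2: everyone gets 0.1
    print(granted_credit([0.1, 10.0, 12.0]))  # quorum 3: low and high dropped -> 10.0
    # Flop-counted claims within 1% of each other.
    print(granted_credit([9.9, 10.0]))        # quorum 2: 9.9, close to what was claimed
    print(f"{cheat_gets_through(0.05):.4f}")  # 5% cheaters, quorum 2 -> 0.0025
```

The third case shows why a tight flop count makes the "take the lower claim" rule tolerable at quorum 2, while the first two show why benchmark-based claims push toward quorum 3.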

adrianxw (Joined: 18 Sep 05, Posts: 662, Credit: 12,167,519, RAC: 4)

I am a 25-year software development veteran. Of course, I would be interested in seeing the code, but my real interest was in using "alternative" approaches to setting up initial conditions, my belief being that a seasoned data-processing type would use different methods than a biochemist to close in on the best structure. To do this, I do not actually need to see the code; rather, I need the executables as black boxes and an understanding of the data representations.

DK, in the post where the license was mentioned, pointed to the "Academic" licensing of Rosetta, and this sounded like exactly what I needed. Alas, the requirements of that licensing require that your "senior scientist" or whatever countersign the documents. This is, of course, totally impossible if you are not in an academic research institution.

I agree that optimised clients can benefit projects, as they simply turn work round faster. If the available computer resources are insufficient (and, as DB has said in this thread, they are not), optimised clients may be the way forward. HOWEVER, the chaotic approach of Seti is not a good model. Perhaps some of the better developers could receive the code and simply submit their optimisations at the source level back to the project for incorporation into the stable code lines. How the choice of "better developers" is made is left as an exercise for the reader; perhaps the meritocracy-type structure that Apache is developed under.

Wave upon wave of demented avengers march cheerfully out of obscurity into the dream.

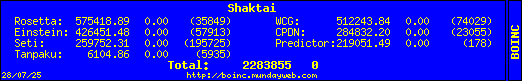

Shaktai (Joined: 21 Sep 05, Posts: 56, Credit: 575,419, RAC: 0)

> We would give a specific random seed for each work unit and use homogeneous redundancy.

Hmm! Predictor uses homogeneous redundancy. Rosetta creates a random seed so that each work unit is useful, not just a duplicate of another work unit. So in effect, you are not really validating credits so much as equalizing them to deter cheating. Each work unit would still be unique; it would just need to be matched up with a similar work unit on the same platform, even though the outcomes would be different. Homogeneous redundancy would also ensure that any work from apps manipulated to cheat would not get any credit. Am I understanding that correctly? If so, I might be able to buy into that.

Team MacNN - The best Macintosh team ever.
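Shaktai's question turns on what homogeneous redundancy actually constrains. As I understand BOINC's scheme, hosts are grouped into platform classes (roughly operating system plus CPU vendor), and once one replica of a work unit goes to a host, the remaining replicas only go to hosts in the same class. The sketch below is a hypothetical scheduler fragment, not BOINC's code: `Host`, `hr_class` and `Workunit` are invented names, and it assumes, per David E K's post, that the server fixes one seed per work unit that every replica shares.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Host:
    name: str
    os: str
    cpu_vendor: str


def hr_class(host: Host) -> Tuple[str, str]:
    """Hypothetical coarse platform class: operating system plus CPU vendor."""
    return (host.os, host.cpu_vendor)


@dataclass
class Workunit:
    wu_id: int
    seed: int    # fixed by the server and shared by every replica (assumption)
    quorum: int  # number of replicas to send out
    locked_class: Optional[Tuple[str, str]] = None
    assigned: List[Host] = field(default_factory=list)

    def can_send_to(self, host: Host) -> bool:
        """A host qualifies if replicas remain and it matches the locked class."""
        if len(self.assigned) >= self.quorum:
            return False
        return self.locked_class is None or hr_class(host) == self.locked_class

    def send_to(self, host: Host) -> None:
        if not self.can_send_to(host):
            raise ValueError(f"{host.name} cannot receive workunit {self.wu_id}")
        if self.locked_class is None:
            self.locked_class = hr_class(host)  # the first replica fixes the class
        self.assigned.append(host)


if __name__ == "__main__":
    mac1 = Host("mac1", "Darwin", "PowerPC")
    mac2 = Host("mac2", "Darwin", "PowerPC")
    win1 = Host("win1", "Windows", "Intel")
    wu = Workunit(wu_id=1, seed=12345, quorum=2)
    wu.send_to(mac1)
    print(wu.can_send_to(win1))  # False: different platform class
    print(wu.can_send_to(mac2))  # True: same class as the first replica
```

Under these assumptions, a shared seed plus a shared platform class is what would let a validator expect matching results rather than merely similar credit claims.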


Astro (Joined: 2 Oct 05, Posts: 987, Credit: 500,253, RAC: 0)

I couldn't code my way out of a paper bag, so I'm stuck with what I have, and that's a feeling that if you have even the slightest doubt about whether releasing the code is the right thing, then don't do it. The second, and I mean the second, it's out of the bag, you'll NEVER get it back in, should you change your mind in the future for any currently unthought-of reason.

dgnuff (Joined: 1 Nov 05, Posts: 350, Credit: 24,773,605, RAC: 0)

> I agree with Janus. Projects that have been around for a while using BOINC (Seti, in particular) have already dealt with these issues. The possible benefits of releasing the code (users can contribute in yet another way, which can directly impact the project positively, such as code optimization) and using redundancy (to prevent cheating) outweigh the decrease in production, in my opinion. Also, there are a lot of talented developers out there and I wouldn't rule out the possibility of increased production from their contributions.

I wouldn't go that route. One slip, and the source would be all over the place. Either release what you're going to release at full scale, or don't release at all.

It seems that we have two requirements that are apparently at odds with each other. First, the desire to release the source so that people can tinker with it and make it go faster. Second, the need to prevent cheating, meaning falsification of the amount of work done (and hence credit earned), but more importantly corruption of the returned data, which could severely hinder the usefulness of Rosetta.

There's an old adage in the computer biz that has stood the test of time for as long as I can remember: "90% of the code does 10% of the work; the other 10% of the code does 90% of the work." What this means is that to satisfy the first requirement, it's not necessary to release the entire Rosetta source, merely a harness that encapsulates the critical 10%. It would mean some additional work, but there is a huge hidden benefit to doing this. If you reduce the released code to a unit-test harness that simply tests the underlying implementation, we (as developers) get two benefits: we can concentrate on the code that matters, and we have the harness available to verify that our optimized algorithm is conformant. There may be cases where you do need to release the full source, but these would have to be handled on a case-by-case basis.
In the best possible world, someone might develop a port of BOINC that runs on the Xbox / PS2 / Xbox 360 / PS3 / insert your favorite game console here. The possibility of leveraging the power of even 1/10 of one percent of the multiple tens of millions of game consoles in the United States is not something to be ignored.
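dgnuff's unit-test-harness idea can be sketched in a few lines. Everything here is hypothetical: `reference_pair_sum` stands in for some hot kernel (a made-up sum of squared inter-atom distances, not Rosetta code), `optimized_pair_sum` plays the role of a volunteer's faster rewrite, and `check_conformance` is the harness a project could ship alongside test inputs so contributors can verify their version before submitting it.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Coords = List[Tuple[float, float, float]]


def reference_pair_sum(coords: Coords) -> float:
    """Reference kernel: sum of squared distances over all atom pairs, O(n^2)."""
    total = 0.0
    for i in range(len(coords)):
        for j in range(i + 1, len(coords)):
            total += sum((a - b) ** 2 for a, b in zip(coords[i], coords[j]))
    return total


def optimized_pair_sum(coords: Coords) -> float:
    """Contributed O(n) rewrite using the identity
    sum_{i<j} |x_i - x_j|^2 = n * sum_i |x_i|^2 - |sum_i x_i|^2."""
    n = len(coords)
    sum_sq = sum(x * x + y * y + z * z for x, y, z in coords)
    sx = sum(x for x, _, _ in coords)
    sy = sum(y for _, y, _ in coords)
    sz = sum(z for _, _, z in coords)
    return n * sum_sq - (sx * sx + sy * sy + sz * sz)


def random_cases(count: int, atoms: int, seed: int = 0) -> List[Coords]:
    """Reproducible test inputs; a real harness would ship curated test WUs."""
    rng = random.Random(seed)
    return [
        [tuple(rng.uniform(-10.0, 10.0) for _ in range(3)) for _ in range(atoms)]
        for _ in range(count)
    ]


def check_conformance(
    reference: Callable[[Coords], float],
    candidate: Callable[[Coords], float],
    cases: Sequence[Coords],
    rel_tol: float = 1e-9,
) -> List[Tuple[int, float, float]]:
    """Run both implementations on every case; return (index, expected, actual)
    for each case where they disagree beyond the tolerance."""
    failures = []
    for index, case in enumerate(cases):
        expected, actual = reference(case), candidate(case)
        if not math.isclose(expected, actual, rel_tol=rel_tol, abs_tol=1e-9):
            failures.append((index, expected, actual))
    return failures


if __name__ == "__main__":
    cases = random_cases(count=20, atoms=50)
    print(check_conformance(reference_pair_sum, optimized_pair_sum, cases))  # []
```

The design point is the one dgnuff makes: contributors only need the kernel and the harness, and an empty failure list is the project's cue to review the patch, not proof that the whole application is still correct.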


Janus (Joined: 6 Oct 05, Posts: 7, Credit: 1,209, RAC: 0)

I think the license sounds fine. If released along with a couple of test WUs, some result-quality boundaries and 2 or 3 times redundancy, it would be great. By releasing the code you also attract the portion of crunchers who only crunch on open-source projects.


j2satx (Joined: 17 Sep 05, Posts: 97, Credit: 3,670,592, RAC: 0)

> I think the license sounds fine. If released along with a couple of test WUs, some result quality boundaries and 2 or 3 times redundancy it would be great.

By releasing the application code, you're likely to go from science to fiction.


[BOINCstats] Willy (Joined: 24 Sep 05, Posts: 11, Credit: 3,763,250, RAC: 0)

Tern (Joined: 25 Oct 05, Posts: 576, Credit: 4,701,412, RAC: 0)

I don't know who accepted the bet, but if he/she guessed today, he/she is a winner! My bet was January. Shows how much _I_ know!


Divide Overflow (Joined: 17 Sep 05, Posts: 82, Credit: 921,382, RAC: 0)

stephan_t (Joined: 20 Oct 05, Posts: 129, Credit: 35,464, RAC: 0)

> when we get to 1,000,000 credits per day, we go to two fold redundancy and give out the code. (Anybody want to place a bet on when we break the 1,000,000 a day mark?)

That's amazing. The massive boost in the number of users these past few days is linked to it, of course. I had bet February 06! Congratulations, Rosetta!

Team CFVault.com http://www.cfvault.com


Tangent (Joined: 17 Sep 05, Posts: 4, Credit: 18,859, RAC: 0)

> when we get to 1,000,000 credits per day, we go to two fold redundancy and give out the code. (Anybody want to place a bet on when we break the 1,000,000 a day mark?)

The front page also shows over 10 TFLOPS. WooHoo!


©2026 University of Washington

https://www.bakerlab.org