How to have the best BOINC project.

Message boards : Number crunching : How to have the best BOINC project.

| Author | Message |

|---|---|

Tern Joined: 25 Oct 05 Posts: 576 Credit: 4,701,412 RAC: 0 |

I've attempted to put these in priority order, but naturally, they're MY priorities. Others may have different ones. Plus things I thought about 'late' wound up near the bottom because I'm too lazy to renumber.

1. Communication. If the project staff does not communicate with the participants, letting them know the goals and status of the project, the participants will feel unappreciated or unneeded, and leave.
2. Communication. If a participant reports a problem that the message board volunteers can't solve, and project staff can't be reached to investigate, the problem remains unsolved and the participant will get fed up and leave.
3. Communication. (seeing a pattern here?) If the project has a problem, and project staff puts a message on the home page or technical news or in SOME way lets people know about it, and keeps them up-to-date on expected time of a fix, then the participant will shrug and say "it happens". If nobody has a clue what's going on, participants will eventually say "that project is always messed up", and leave. (SETI, Predictor, you need to listen to this...)
4. Project support for various platforms. If your project doesn't support my CPU or OS, I can't participate. This is a balancing act; a project can't devote resources to a platform that won't contribute enough to 'recover' that cost, but the P.R. factor must be kept in mind as well. Just because there are "enough" Windows boxes around to do what a project needs done doesn't mean I will put _my_ Windows box on your project, if you don't also support Macs. I've been a Mac developer for 20 years; insult my platform, you won't get any favors from me. And "support" doesn't mean compile it once and forget it; it means keeping the various versions up-to-date with each other, at least within reason. (Too many to list don't manage this one.)
5. Have work. If the nature of the project is such that you will NOT always have work available, say so up front. (LHC does this.) If work outages are rare, but you know one is coming up, see #1-3 above. (SETI blows this one at times.)
6. Have CONSISTENT work, or estimate correctly. BOINC is designed, especially with the Result Duration Correction Factor, for work units that all take 'close' to the same length of time. A factor of even 2x between one result and the next, without a corresponding adjustment to the estimate, is too much. If you have one block of results to be released that will take 2x as long as the block before, increase the estimate - this will reduce the impact on the participants. If the nature of your work is such that you _cannot_ estimate how long a result will take, then some method should be worked out of "padding" or "truncating" the work.

   Example; if you normally do something five times in a result, and after three such 'blocks', the time it's taken to get this far is longer than normal, consider only doing four blocks instead of five. If after doing all five the elapsed time is considerably _shorter_ than normal, then do a sixth. (Comment... Rosetta is real marginal on this point. Thus the example chosen. :-) )

   BAD example of this, from SZTAKI - the code originally was such that all results took about the same length of time. A way was found to identify early in the run that it was going to fail, so (good idea) they terminated the result at that point rather than wasting time going to completion. The bad part is (see #1-3) they didn't tell anyone they were going to do this until after the fact. And, it would have been much nicer if every result included _two_ "things to test", the second of which would only be tested if the first "failed off" early. If both failed off, the result would still be short, but this would happen less often. As it is, DCF is worthless now for SZTAKI.

   If the nature of your work is such that it is impossible to know how long a result is going to take, even approximately, say so up front.
7. Don't make drastic changes in WU length without notice. (Hm... related to #1-3...) If someone signs up with your project because they want/need a project with very short result times, and you suddenly make your results compete with CPDN, they'll probably leave. (SETI, are you listening?)
8. Don't make drastic changes in the science application without notice. Heck, don't make ANY changes to the science application without notice! Some people are on dial-up. They don't appreciate going along for months with every connection taking ten minutes, then one day when they're in a hurry, you send them 50MB of code. (None of the projects think about dial-up users enough.)
9. Be consistent and fair on credits. (See 'code release and redundancy' thread, I'm not going to retype that whole thing in here!)
10. Test what you're sending out. If you have a new science application, or even just a new block of work units that are a bit different, run it in-house FIRST! And not just on one machine for one result. Run two or three results, on EVERY platform you support. (Predictor... the science application for Mac for their latest new block of work wasn't even in the right place on the server and could NOT be downloaded. When they finally fixed that, it failed on every result.)
11. Have a screensaver. Make it pretty. (And see #4...)
12. Stomp trolls. The "cafe" can be pretty loose, but off-topic "flame wars" in Number Crunching or the help area is a no-no.
13. Don't stomp non-trolls. If someone hates something about your project and says so, that's their opinion. If they start saying so every 20 minutes, see #12.
14. Stay up-to-date with BOINC versions. Don't wait until V5 is running on every other project site, and V5.x becomes "recommended", before you BEGIN to work on installing V5 on your project. (SZTAKI again...)
15. Don't lie to the participants. If you say you're going to have something by "x" date, and you can't have it by then, come back and say so. Don't just let the date go by in silence. (SZTAKI...) If it's not a date, but something you're going to do, do it. If you find out that you thought you could do something but can't, explain why. If you say "you will get credit for these results", then figure out SOME way to give the credit! (SETI some, SZTAKI definitely.)

----

I'm sure I've left MANY potential problems out of this list - but I'm equally sure others will remedy that pretty quickly! :-)

|

|

Divide Overflow Joined: 17 Sep 05 Posts: 82 Credit: 921,382 RAC: 0 |

|

Paul D. Buck Joined: 17 Sep 05 Posts: 815 Credit: 1,812,737 RAC: 0 |

Bill, I read this and all I can say is that I don't think you stressed communication enough. In another thread, you chatted about PPAH and that got me to thinking and they are on the way off my systems ... for that dratted "C" word ... And yes, SDG and PG are also candidates to go ... BTW, EAH is also slipping ... I don't see Bruce around the NC forum much at all ...

Edit: WCG, thinking about it, also has an issue with the web site not showing the BOINCers the data that we are accustomed to ... so, they may lose me even though I have not done much there ... |

Tern Joined: 25 Oct 05 Posts: 576 Credit: 4,701,412 RAC: 0 |

KNEW I'd forgotten some...

16. Checkpoints. Have them. Frequently. Losing work is not nice. If you can't checkpoint, your app isn't a candidate for Distributed Computing. Actually, I'll be blunter - if you can't checkpoint, you need to rethink _something_, because you don't have any idea what your app is even doing. (uFluids) If you can't think of any other way to checkpoint, dump the entire variable table to disk. That's a painful way to restart, but it's a _working_ way.
17. Update the Progress indicator. If you can't get it right, guess. If it's not linear, it's a pain, but better to _move_ than to sit in one place for more than a few minutes. A progress of 0% hasn't started yet - if the status is "running", the progress better be above 0% in a few seconds (many). And a progress of 100% is finished. If there's more work to be done (SETI) then you aren't at 100%, you're at 99%. If the progress says 105%, your application is just broken (Predictor).
18. If something goes wrong, exit gracefully. Don't lock up the system and prevent any other work from getting done. (Predictor.)
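The fallback in point 16 (dump the whole state to disk) can be sketched in a few lines. This is only an illustration under stated assumptions, not BOINC API code: the file name `checkpoint.json`, the shape of the `state` dictionary and both function names are made up for the example. Writing to a temporary file and then renaming it means a crash in mid-write leaves the previous checkpoint intact.

```python
import json
import os
import tempfile

def save_checkpoint(state, path="checkpoint.json"):
    """Write state atomically: temp file first, then rename over the old one."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as handle:
        json.dump(state, handle)
        handle.flush()
        os.fsync(handle.fileno())   # make sure the bytes reach the disk
    os.replace(tmp_path, path)      # atomic swap of new checkpoint for old

def load_checkpoint(path="checkpoint.json"):
    """Return the saved state, or None if there is nothing to resume from."""
    try:
        with open(path) as handle:
            return json.load(handle)
    except FileNotFoundError:
        return None
```

On startup an application would call `load_checkpoint()` and resume from the returned state if it is not `None`; how often to call `save_checkpoint()` is a trade-off between lost work and disk traffic.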

|

Webmaster Yoda Joined: 17 Sep 05 Posts: 161 Credit: 162,253 RAC: 0 |

19. Find a mechanism to detect endless loops. I don't like to wake up in the morning to find my server has been spinning its wheels producing nothing. Happened yesterday - two Rosetta WU still at 1% after more than 6 hours crunching (each). After restarting BOINC, both WU started from scratch and were done in an hour or two. *** Join BOINC@Australia today *** |
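One hedged way to approach point 19 is a watchdog that notices when the reported progress has stopped advancing. The sketch below is an assumption about how such a check could look, not something any project is known to use; the class name, the one-hour threshold in the usage note and the injectable `clock` parameter are all hypothetical.

```python
import time

class StallDetector:
    """Flags a run whose reported progress has not advanced for too long."""

    def __init__(self, max_stall_seconds, clock=time.monotonic):
        self.max_stall_seconds = max_stall_seconds
        self.clock = clock
        self.best_progress = 0.0
        self.last_advance = clock()

    def report(self, progress):
        """Record a progress fraction (0.0 to 1.0); return True if stalled."""
        now = self.clock()
        if progress > self.best_progress:
            self.best_progress = progress
            self.last_advance = now
        return (now - self.last_advance) > self.max_stall_seconds
```

With `StallDetector(3600)`, a work unit that sits at 1% for six hours would be flagged after the first hour, at which point the application could restart from its last checkpoint or exit with an error instead of spinning.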

Jack Schonbrun Joined: 1 Nov 05 Posts: 115 Credit: 5,954 RAC: 0 |

Great list, Bill. As I'm sure you are aware, we are trying to make RAH a first rate BOINC project. But we are also new at this, and appreciate guidance. I'm curious about the implications of point #6:

6. Have CONSISTENT work, or estimate correctly. BOINC is designed, especially with the Result Duration Correction Factor, for work units that all take 'close' to the same length of time...

What are the actual problems caused by variable length Work Units? |

Webmaster Yoda Joined: 17 Sep 05 Posts: 161 Credit: 162,253 RAC: 0 |

What are the actual problems caused by variable length Work Units?

It's related to work cache. Put simply, if the estimate is for a WU to take an hour to do and it downloads a day's work, it might download 24 work units. If however they end up taking 5 hours each, it may run into trouble with deadlines (especially on systems that run more than one project).

Oh, and Rosetta is already a first rate BOINC project, but there's always room for improvement :-)

*** Join BOINC@Australia today *** |
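The work-cache arithmetic in this reply can be written out as a toy calculation. It is a deliberately simplified model (one CPU, one project, no deadlines or resource shares), and the function name and parameters are invented for the illustration.

```python
def cache_outcome(cache_hours, estimated_hours, actual_hours):
    """Return (work units fetched, hours they really take on one CPU)."""
    fetched = int(cache_hours // estimated_hours)
    return fetched, fetched * actual_hours

# One-hour estimates, five-hour reality: a "day" of work becomes five days.
print(cache_outcome(24, 1, 5))

# The opposite mistake: five-hour estimates, one-hour reality.
print(cache_outcome(24, 5, 1))
```

In the first case the host holds 24 work units that need 120 hours of crunching, which is where the deadline trouble comes from; in the second it fetches only 4 work units and is idle again after 4 hours.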

Webmaster Yoda Joined: 17 Sep 05 Posts: 161 Credit: 162,253 RAC: 0 |

The converse is also true - if estimates are much higher than actual time, BOINC could keep running out of work. *** Join BOINC@Australia today *** |

Jack Schonbrun Joined: 1 Nov 05 Posts: 115 Credit: 5,954 RAC: 0 |

19. Find a mechanism to detect endless loops. I don't like to wake up in the morning to find my server has been spinning its wheels producing nothing. Happened yesterday - two Rosetta WU still at 1% after more than 6 hours crunching (each). After restarting BOINC, both WU started from scratch and were done in an hour or two.

One of the interesting consequences of distributed computing is that you find rare bugs in your code. (Or maybe not so rare, since a number of people have reported something similar on the last batch of Work Units.) We appreciate your patience as we work towards making our code as bulletproof as possible. |

Tern Joined: 25 Oct 05 Posts: 576 Credit: 4,701,412 RAC: 0 |

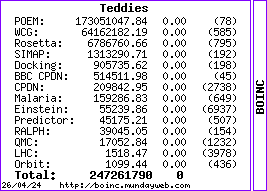

We appreciate your patience as we work towards making our code as bulletproof as possible.

Jack, your presence in this discussion illustrates my #'s 1 through 3. As long as you do that, we'll let you slide on the occasional issue with # 19. :-)

And Yoda had it exactly right on #6. It used to be very important for a project to get the _estimate_ right; now that is less important, as we have the DCF, but one "long" result can mess up the DCF and it will take ten "shorter" ones to get it right again.

And in case ANY of what I wrote is interpreted by anyone as being "anti-Rosetta", let me put up my "grades" for the six projects I have personal experience with...

Einstein - A (but a couple of low grades on communication recently, so watch out!)
Rosetta - A (probably actually an A- right now, but gets points for effort, WILL be better yet)
CPDN - B (partly incomplete, I haven't been there long)
SETI - C
Predictor - D
SZTAKI - F

|

|

John McLeod VII Joined: 17 Sep 05 Posts: 108 Credit: 195,137 RAC: 0 |

Consistent work lengths are not really a requirement. Fairly consistent estimates are. If there are any parameters (for example the # of atoms or other elements in the protein) that affect the run time of a result in a reasonably consistent fashion, please make the estimated times depend upon these in some fashion. Note that LHC does this - they have 3 types of results that take factors of 10 difference in time - 1,000,000, 100,000 and 10,000 turn results.

Please do not pad the work as that wastes CPU time, and the host could have some other result - possibly from some other project - to work on.

BOINC WIKI |

Tern Joined: 25 Oct 05 Posts: 576 Credit: 4,701,412 RAC: 0 |

Consistent work lengths are not really a requirement. Fairly consistent estimates are.

John, to clarify... "consistent" estimate to me would be "all the same", but I don't think that's what you mean. I think you mean "accurate" estimates. Not in terms of absolute time, but in, well, consistency... um... yeah... with the actual times. So it doesn't matter if the projects have 1 hour WUs and 2 hour WUs and 10 hour WUs, as long as the estimates _on_ those WUs are z, 2z, and 10z. The fact that "z" is not 3600 seconds is what the DCF adjusts for. My complaint with inconsistent work lengths was that ALL the estimates say "z".

Please do not pad the work as that wastes CPU time

Bad choice of words on my part. "Pad" implies fluff, wasting time; my meaning was "if the project goal is to do a million widgets, and we have decided that five widgets per work unit is reasonable, but in _this_ work unit, the first widget finished in three seconds, instead of only doing five, do six". It's useful work, just more of it accomplished "now" because of the conditions, instead of being done "later" in a different work unit.

All evidence to the contrary, English really _is_ my native language...
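The way a correction factor interacts with one mis-estimated result can be shown with a toy model. The update rule here (jump up at once when a result runs long, then close a tenth of the gap with each accurate result) is an assumption chosen to mirror the "one long result, ten shorter ones" behaviour described in this thread; it is not copied from the BOINC client, and the function name is hypothetical.

```python
def update_dcf(dcf, estimated_seconds, actual_seconds):
    """Toy correction-factor update: rise immediately, fall slowly."""
    ratio = actual_seconds / estimated_seconds
    if ratio > dcf:
        return ratio                      # one long result raises it at once
    return dcf + 0.1 * (ratio - dcf)      # each shorter one closes 10% of the gap

dcf = update_dcf(1.0, 3600, 7200)         # a result that took twice its estimate
history = [dcf]
for _ in range(10):                       # ten results that match their estimate
    dcf = update_dcf(dcf, 3600, 3600)
    history.append(dcf)
print([round(value, 2) for value in history])
```

In this model the factor jumps to 2.0 and is still about 1.35 after ten accurate results, so every displayed estimate (project estimate times the factor) stays inflated for a while. Estimates of z, 2z and 10z on 1, 2 and 10 hour work units never trigger the jump in the first place.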

|

|

David Baker Volunteer moderator Project administrator Project developer Project scientist Joined: 17 Sep 05 Posts: 705 Credit: 559,847 RAC: 0 |

6. Have CONSISTENT work, or estimate correctly. BOINC is designed, especially with the Result Duration Correction Factor, for work units that all take 'close' to the same length of time. A factor of even 2x between one result and the next, without a corresponding adjustment to the estimate, is too much. If you have one block of results to be released that will take 2x as long as the block before, increase the estimate - this will reduce the impact on the participants. If the nature of your work is such that you _cannot_ estimate how long a result will take, then some method should be worked out of "padding" or "truncating" the work. Example; if you normally do something five times in a result, and after three such 'blocks', the time it's taken to get this far is longer than normal, consider only doing four blocks instead of five. If after doing all five the elapsed time is considerably _shorter_ than normal, then do a sixth. (Comment... Rosetta is real marginal on this point. Thus the example chosen. :-) ) BAD example of this, from SZTAKI - the code originally was such that all results took about the same length of time. A way was found to identify early in the run that it was going to fail, so (good idea) they terminated the result at that point rather than wasting time going to completion. The bad part is (see #1-3) they didn't tell anyone they were going to do this until after the fact. And, it would have been much nicer if every result included _two_ "things to test", the second of which would only be tested if the first "failed off" early. If both failed off, the result would still be short, but this would happen less often. As it is, DCF is worthless now for SZTAKI.

We are guilty of this as well. In the recent sets of runs, we truncate full atom refinement early (after 5% and 40% of the run time) if the energy is high enough that it is unlikely the refinement will yield a low energy structure. There are ten independent runs in each work unit, and depending on how many refinement calculations get terminated early (on average 50% at the first check, and 50% of the remainder at the 2nd check) there will be a reasonable amount of variation in the total time (the variation is muted a bit compared to SZTAKI perhaps because there are ten independent calculations per WU). We will try to put in logic to keep the total run time as constant as possible. |

|

Scribe Joined: 2 Nov 05 Posts: 284 Credit: 157,359 RAC: 0 |

Do not do 'filler' work - UD has run out of Cancer so it is endlessly repeating the same WU's over and over and over.....

|

Tern Joined: 25 Oct 05 Posts: 576 Credit: 4,701,412 RAC: 0 |

My (new) understanding of the Rosetta logic is that each WU runs ten "sets" of operations, with each one being based on a random seed. Per the description of discarding at 5% or 40%, worst case on the low end is 1/20 the "full" time. (20 to 1 ratio in lengths; WAY too much.) If the random seeds are generated by the server, it is a bit difficult, but not impossible, to get consistent run times; however, if the seed is generated on the host, it _should_ be fairly simple to _approach_ consistency in the run times...

Assume a "set" if completed takes "x" time. On the host side:

If a "set" is discarded at the 5% mark, add 0.95 to a counter. If a "set" is discarded at the 40% mark, add 0.6 to this counter. If it completes, do nothing to the counter.

After doing the 10th "set", check this counter; (label "A" here) if the counter is > 1, run another "set". If _that_ set is discarded at the 5% mark, subtract 0.05 from the counter; if it is discarded at the 40% mark, subtract 0.4 from the counter. If it completes, subtract 1 from the counter. Go to label "A".

If my math is right, (somebody check me, it's late...) then the "best case" from the project side is if _all_ of these are discarded at 5%. The first 10 add up to where the counter is 9.5. The loop then executes _170_ times before the program completes; the project has 180 "sets" to enter in the database, and the time the result took is 180*0.05=9x. Likewise, if all "sets" are discarded at 40%, after the first 10, the counter is 6. The loop then executes 13 more times (after 12 the counter is still 1.2, which is > 1), giving the project 23 "sets", and the time is 23*0.4=9.2x. If all sets run to completion, you get 10, time is 10x.

From the participant's viewpoint, the results range from 9x to 10x in time - a very small range. Compared to today, where the range is 0.5x to 10x. (Somebody needs to figure all the possibilities, in case some strange combination gives a spike on either side... I've only considered the extremes.)

The server must be prepared to accept anywhere from 10 to 180 "sets" from the host, instead of always expecting 10. That's the only server-side change I think is needed. I'm sure I could improve on the algorithm to both tighten the range and tweak the last possible set out of it, but my eyes won't stay open...

Also, we need to know from the project the _average_ current time in terms of "x"; is it close to 10x? Closer to 0.5x? The initial count of 10 may need to change; it would be nice to keep the results fairly "short", somewhere around the current average time. If most "sets" are discarded at 5% today, and the current 'normal' time is 0.5x, the last thing I want to do is increase that by a factor of 20. Rosetta takes just over an hour on a reasonably fast PC, which is just about "right", at least to me.

If the seeds are generated on the server, this could still work, by generating 180 such seeds for each WU, and reporting the last 170 of them back (worst case) as "not done". Any that were not done would be re-issued in another WU later...

No filler, no padding, just either more or less actual work being done in each WU based on the time it takes!
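The counter rule in this post is easy to check by running it. The sketch below is a literal reading of the rule, with two assumptions of its own: costs are kept as integer hundredths of "x" to avoid floating-point drift, and the random mix (half of all sets discarded at 5%, half of the rest at 40%) is taken from the averages quoted earlier in the thread. The function and constant names are invented for the example.

```python
import random

FULL = 100             # cost of a completed "set", in hundredths of x
EARLY_EXITS = (5, 40)  # costs of sets discarded at the 5% and 40% checks

def run_work_unit(next_cost, base_sets=10):
    """Apply the counter rule; next_cost() returns 5, 40 or 100 per set.

    Returns (number of sets run, total time in hundredths of x).
    """
    counter = 0   # time "saved" by early discards, in hundredths of x
    elapsed = 0
    sets = 0
    for _ in range(base_sets):
        cost = next_cost()
        counter += FULL - cost
        elapsed += cost
        sets += 1
    while counter > FULL:          # label "A": more than one full set saved
        cost = next_cost()
        counter -= cost
        elapsed += cost
        sets += 1
    return sets, elapsed

def random_cost(rng):
    """Assumed mix: half discarded at 5%, half of the rest at 40%."""
    roll = rng.random()
    if roll < 0.5:
        return EARLY_EXITS[0]
    if roll < 0.75:
        return EARLY_EXITS[1]
    return FULL

print(run_work_unit(lambda: 5))     # every set discarded at 5%
print(run_work_unit(lambda: 40))    # every set discarded at 40%
print(run_work_unit(lambda: 100))   # every set runs to completion
rng = random.Random(0)
print(run_work_unit(lambda: random_cost(rng)))
```

The three fixed cases give 180 sets in 9x, 23 sets in 9.2x and 10 sets in 10x. More generally the total time always equals 10x minus the final counter, and the loop stops with the counter between 0 and 1, so every work unit lands between 9x and 10x whatever the mix of outcomes; no strange combination produces a spike.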

|

|

David Baker Volunteer moderator Project administrator Project developer Project scientist Joined: 17 Sep 05 Posts: 705 Credit: 559,847 RAC: 0 |

My (new) understanding of the Rosetta logic is that each WU runs ten "sets" of operations, with each one being based on a random seed. Per the description of discarding at 5% or 40%, worst case on the low end is 1/20 the "full" time. (20 to 1 ratio in lengths; WAY too much.) If the random seeds are generated by the server, it is a bit difficult, but not impossible, to get consistent run times; however, if the seed is generated on the host, it _should_ be fairly simple to _approach_ consistency in the run times...

This would certainly equalize the work unit times. Alternatively, since we will soon be doing calculations with many different proteins which may take somewhat different amounts of time, if there is a way of querying the total run time that works on all platforms, then after each trajectory is finished, before starting the next one, we could check the elapsed time and, if it is greater than 6 hours, skip the remaining runs. |
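The elapsed-time check proposed here could look roughly like the following. It is a sketch under assumptions, not Rosetta code: it measures wall-clock time with `time.monotonic`, treats each trajectory as a plain callable, and the function name and `clock` parameter are hypothetical.

```python
import time

def run_with_time_budget(trajectories, budget_seconds, clock=time.monotonic):
    """Run trajectories in order; skip the rest once the budget is spent.

    Returns (results of the trajectories that ran, number skipped).
    """
    start = clock()
    results = []
    for index, trajectory in enumerate(trajectories):
        # Check only between trajectories, never in the middle of one.
        if clock() - start > budget_seconds:
            return results, len(trajectories) - index
        results.append(trajectory())
    return results, 0
```

Calling `run_with_time_budget(runs, 6 * 3600)` with a list of ten callables would finish whichever trajectory is in progress when the six-hour mark passes and then report how many were skipped, so the server can re-issue them.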

nasher Joined: 5 Nov 05 Posts: 98 Credit: 890,793 RAC: 5 |

Honestly, whatever works to help the science work better is what we want. I like the list and agree communication isn't listed enough. That's one flaw I haven't found here; there is lots of communication and understanding. Personally, except for the minor problem of receiving "no work" errors from some of my computers this week, I haven't had any serious complaints about this project, and it's getting the lion's share of my computers' time.

Nasher

|

Hoelder1in Joined: 30 Sep 05 Posts: 169 Credit: 3,915,947 RAC: 0 |

This would certainly equalize the work unit times. alternatively, since we will soon be doing calculations with many different proteins which may take somewhat different amounts of time, if there is a way of querying the total run time that works on all platforms, after each trajectory is finished, before starting the next one, we could check the elapsed time and if it is greater than 6 hours, we could skip the remaining runs.

Ingleside stated in the "code release and redundancy" thread that "win9* doesn't know anything about cpu-time" (here). But I guess 'wall time' would also do for this purpose - though of course pseudo-redundancy and variations thereof (as discussed in the c.r. & r. thread) won't work anymore with this kind of approach ... |

Paul D. Buck Joined: 17 Sep 05 Posts: 815 Credit: 1,812,737 RAC: 0 |

We are guilty of this as well. In the recent sets of runs, we truncate full atom refinement early (after 5% and 40% of the run time) if the energy is high enough that it is unlikely the refinement will yield a low energy structure. There are ten independent runs in each work unit, and depending on how many refinement calculations get terminated early (on average 50% at the first check, and 50% of the remainder at the 2nd check) there will be a reasonable amount of variation in the total time (the variation is muted a bit compared to SZTAKI perhaps because there are ten independent calculations per WU). We will try to put in logic to keep the total run time as constant as possible.

Um, wait a moment guys ... Early death on work is fine by me. In SETI@Home it is -9 noisy; in LHC it is BAM!, hit the wall; RAH, well, what David said ... I want a reasonable number for the work so I get the appropriate amount. If it ends early, fine. Stuff happens and there is no point in going onward ... But, I agree, aside from Oopsies, if the work is going to take 9 hours it would be nice to know that up front. PPAH has been sending out work that runs for days with 3 hour estimates. When I see one of those it gets an early death ...

----

Secondly, as one other person also said, don't lock up my machine. I know you are working on the 1% problem, and am willing to give you time to get there, and better yet, I have not seen one in a long time ... but, don't get complacent. I don't know how to simulate the problem, but there should be a reasonable way to detect obviously long run times. I think, though we WANT to deny it, that the frustration comes from the fact that we do 6 hours of work and not only DON'T get a result to return, we don't earn our time for the effort. I think we can live with the loss of occasional work due to failure to validate ... but, the only way I can measure my contribution to your science is with the credit scores ... |

|

Scott Brown Joined: 19 Sep 05 Posts: 19 Credit: 8,739 RAC: 0 |

Nice list Bill, although I agree that you haven't emphasized communication enough. Also in response to your (and Paul Buck's) comments about EAH, as a university professor myself I'd like to point out that we are at the end of the semester (in the U.S. at least). This means that someone like Bruce Allen is probably swamped with various administrative tasks before the holiday break (e.g., exams, etc.). A short drop-off in the communication frequency should be expected (as well as with some of the other U.S. university-based projects). |


©2026 University of Washington

https://www.bakerlab.org