No work from project

Message boards : Number crunching : No work from project


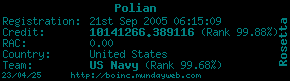

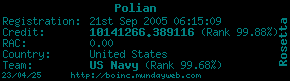

Polian (Joined: 21 Sep 05; Posts: 152; Credit: 10,141,266; RAC: 0)

1/11/2006 4:52:25 PM|rosetta@home|Sending scheduler request to https://boinc.bakerlab.org/rosetta_cgi/cgi

I remember reading somewhere about a possible memory leak in the BOINC feeder. Would it be possible to kill that process on the server end every so often so we can keep the "No work" messages at bay? Just wanting to keep my machines busy :)


Scribe (Joined: 2 Nov 05; Posts: 284; Credit: 157,359; RAC: 0)

Why worry? I get the message a couple of times per day, but the next request, a couple of minutes later, gets work?


Polian (Joined: 21 Sep 05; Posts: 152; Credit: 10,141,266; RAC: 0)

Paul D. Buck (Joined: 17 Sep 05; Posts: 815; Credit: 1,812,737; RAC: 0)

As far as I know, the only discussion about the feeder is the one that I am conducting (though at the moment I seem to be the only participant in the discussion :( ...). In NONE of that is there an issue about a memory leak. However, there are potential problems with the feeder and the scheduler (more precisely, the scheduler instances) that can cause the feeder to run abnormally low on work.

One of the issues has been patched (scheduler side), but I think that this fix is not complete and will not solve all of the issues, particularly under high server loads. That is not a condition experienced here at Rosetta@Home.

The other issue is in each "fill" pass performed by the feeder, in how results are pulled from the enumeration of results to be sent and allocated to the feeder array. The feeder finds an empty "slot", pulls a result from the enumeration, and tests for a collision. If the result is already in the array, the current code advances to the next empty slot and tries again, rather than testing new results from the enumeration until it finds one that does not already exist in the current feeder array. How big a problem this is remains unknown, because the logging of the feeder state is, um, stinky. Just this morning I added to my list of critiques of the feeder/scheduler a suggestion to add a timed report dump to the feeder logs, and perhaps to add this information to the status page (suggested 15-minute cycle time).

I believe that this is a fairly severe problem in the sense that it affects a number of projects: Rosetta@Home is one, PPAH is another (they raised the feeder queue size to avoid the issue), and of course SETI@Home. SAH has gone so far as to stop and restart the feeder to avoid these issues, which of course dumps the entire content of the feeder array, allowing a complete fill with no collisions until collisions begin to add up ...
I cannot prove it, but the "no work" and "there was work for other platforms" messages are both symptoms of these problems, especially since work becomes available within moments of the "no work" message and there are plenty of work units "cut" and ready to be issued. So there is a problem or three, but none of them is a memory leak ... :) If you want the WHOLE story, you can look on the developers' mailing list, or I can post it all here, including the code .... :)
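To make the collision problem described above concrete, here is a minimal Python sketch of one feeder "fill" pass under a deliberately simplified model: the feeder array is a plain list of slots (`None` meaning empty), and the enumeration of results waiting to be sent is a list of result IDs that, in this model, can include results already sitting in the array because they have not been sent yet. The function names `fill_pass_skip_slot`, `fill_pass_retry` and `status_line` are hypothetical; this is not BOINC's feeder code, only an illustration of the two behaviours contrasted in the post, plus the kind of summary line a timed log dump might contain.

```python
from typing import Iterable, List, Optional

# Hypothetical, simplified model of the feeder array: each slot holds a
# result ID, or None when the slot is empty and waiting to be filled.
Slots = List[Optional[int]]


def fill_pass_skip_slot(slots: Slots, enumeration: Iterable[int]) -> int:
    """Behaviour as described: one candidate per empty slot.

    On a collision the slot stays empty for this pass and the loop moves
    on to the next empty slot. Returns the number of slots filled.
    """
    candidates = iter(enumeration)
    filled = 0
    for i, current in enumerate(slots):
        if current is not None:
            continue  # slot already occupied
        result = next(candidates, None)
        if result is None:
            break  # enumeration exhausted
        if result in slots:
            continue  # collision: this slot stays empty until the next pass
        slots[i] = result
        filled += 1
    return filled


def fill_pass_retry(slots: Slots, enumeration: Iterable[int]) -> int:
    """Suggested behaviour: keep pulling candidates for the same empty slot
    until one is found that is not already in the array."""
    candidates = iter(enumeration)
    filled = 0
    for i, current in enumerate(slots):
        if current is not None:
            continue
        for result in candidates:
            if result not in slots:
                slots[i] = result
                filled += 1
                break
        else:
            break  # enumeration exhausted; nothing left for any slot
    return filled


def status_line(slots: Slots) -> str:
    """One-line summary of the array, the sort of thing a timed dump
    (suggested above: every 15 minutes) could write to the feeder log."""
    empty = sum(1 for s in slots if s is None)
    return f"feeder array: {len(slots) - empty}/{len(slots)} slots filled, {empty} empty"


if __name__ == "__main__":
    # Results 101 and 102 are in the array but not sent yet, so (in this
    # model) the enumeration of unsent results returns them again first.
    enumeration = [101, 102, 103, 104, 105, 106]

    a: Slots = [101, 102, None, None, None, None]
    print(fill_pass_skip_slot(a, enumeration), status_line(a))

    b: Slots = [101, 102, None, None, None, None]
    print(fill_pass_retry(b, enumeration), status_line(b))
```

In this toy run the skip-slot pass fills only 2 of the 4 empty slots, while the retry pass fills all 4. Restarting the feeder, as SAH does, corresponds to starting from an all-empty array, where the first pass cannot collide with anything.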

Paul D. Buck (Joined: 17 Sep 05; Posts: 815; Credit: 1,812,737; RAC: 0)

> Usually. This is the only project I run and one of my boxes was idle for several hours when I looked after I got home from work.

The time between scheduler requests increases drastically after the first few failures in a row. This is the exponential backoff mechanism, and it is intended to limit the flooding of the servers in cases where the server does not have work ...
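The backoff described above can be sketched as a delay that doubles with each consecutive failed request, up to a cap. The minimal Python sketch below assumes a 60-second base delay and a 4-hour cap purely for illustration; these numbers and the function name `backoff_delay` are hypothetical, not the BOINC client's actual settings, and real clients commonly add random jitter, which is left out here so the numbers are predictable.

```python
def backoff_delay(failures: int, base: float = 60.0, cap: float = 4 * 3600.0) -> float:
    """Seconds to wait before the next scheduler request.

    failures: number of consecutive failed requests (0 means the last one worked).
    base and cap are illustrative assumptions, not BOINC's real values.
    """
    if failures <= 0:
        return 0.0
    # Limit the exponent so the arithmetic never overflows; 2**32 is large
    # enough that the cap wins for any sensible base/cap combination.
    exponent = min(failures - 1, 32)
    # Double the wait for each additional failure, but never exceed the cap.
    return min(cap, base * 2 ** exponent)


if __name__ == "__main__":
    total = 0.0
    for n in range(1, 11):
        delay = backoff_delay(n)
        total += delay
        print(f"failure {n:2d}: wait {delay / 60:6.1f} min (cumulative {total / 3600:.2f} h)")
```

With these assumed numbers, a machine that gets "No work" eight times in a row has already spent 4.25 hours waiting between requests, which is how a box can sit idle for several hours even though work reappears minutes after a failed request.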


Polian (Joined: 21 Sep 05; Posts: 152; Credit: 10,141,266; RAC: 0)

Thanks for the info, Paul. Perhaps I'm confusing issues with the memory leak, or maybe *my* memory is just failing :) I do agree with you that it is a severe problem. Probably not as severe for people that are running multiple projects. I would join the dev list, but I probably wouldn't understand any of the dialogue :)


Paul D. Buck (Joined: 17 Sep 05; Posts: 815; Credit: 1,812,737; RAC: 0)

> Thanks for the info, Paul. Perhaps I'm confusing issues with the memory leak, or maybe *my* memory is just failing :) I do agree with you that it is a severe problem. Probably not as severe for people that are running multiple projects. I would join the dev list, but I probably wouldn't understand any of the dialogue :)

I think you would be surprised. Most of it is not that technical. And you might find it interesting (well, at least I do). It is one of the "tea leaves" *I* use to keep an eye on where BOINC is going ... The last "leak" issue I am aware of was the one where BOINC was not handling connections correctly, which caused communication problems over time.


Scribe (Joined: 2 Nov 05; Posts: 284; Credit: 157,359; RAC: 0)

Lots of these and the WU's in queue on the Home page is very very very low......


David Baker, Volunteer moderator, Project administrator, Project developer, Project scientist (Joined: 17 Sep 05; Posts: 705; Credit: 559,847; RAC: 0)

> Lots of these and the WU's in queue on the Home page is very very very low......

We are just submitting a large batch of WUs for the most promising method, based on the last few weeks of tests. I didn't anticipate how fast the queue would be emptied! This happened because we want maximum flexibility to submit new jobs based on analysis of the latest results, so we are only submitting several days' worth of jobs at a time.

Nite Owl (Joined: 2 Nov 05; Posts: 87; Credit: 3,019,449; RAC: 0)

> Lots of these and the WU's in queue on the Home page is very very very low......

That's what I'm looking for, several days' work.... Right now 65% of my 30-machine farm is sitting idle looking at "No work from project"....


Morphy375 (Joined: 2 Nov 05; Posts: 86; Credit: 1,629,758; RAC: 0)

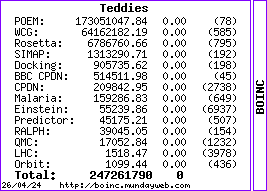

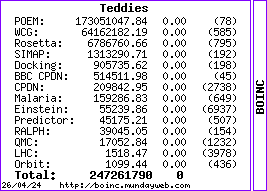

"Ready to send 3,664". Looks good.....

Teddies....

Nite Owl (Joined: 2 Nov 05; Posts: 87; Credit: 3,019,449; RAC: 0)

> "Ready to send 3,664". Looks good.....

Must have already been grabbed in a split second, because there sure isn't any there now....


Morphy375 (Joined: 2 Nov 05; Posts: 86; Credit: 1,629,758; RAC: 0)

> "Ready to send 3,664". Looks good.....

Just go to "server status" in the lower left.... I'm downloading jobs right now... ;-))

Edith: The status on the main page is almost one hour behind.... /Edith

Teddies....


Scribe (Joined: 2 Nov 05; Posts: 284; Credit: 157,359; RAC: 0)

Yup, I am downloading also..... |

Nite Owl (Joined: 2 Nov 05; Posts: 87; Credit: 3,019,449; RAC: 0)

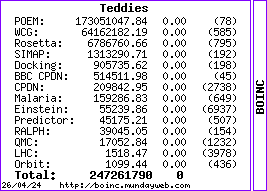

> "Ready to send 3,664". Looks good.....

It must favor Germans and Brits then... I just got the ol' standby: "No work from project"....

Join the Teddies@WCG


Morphy375 (Joined: 2 Nov 05; Posts: 86; Credit: 1,629,758; RAC: 0)

> "Ready to send 3,664". Looks good.....

:-(( Take a little nap, Owlie....

Teddies....


Scribe (Joined: 2 Nov 05; Posts: 284; Credit: 157,359; RAC: 0)

Now over 9,000 ready to send....


©2026 University of Washington

https://www.bakerlab.org